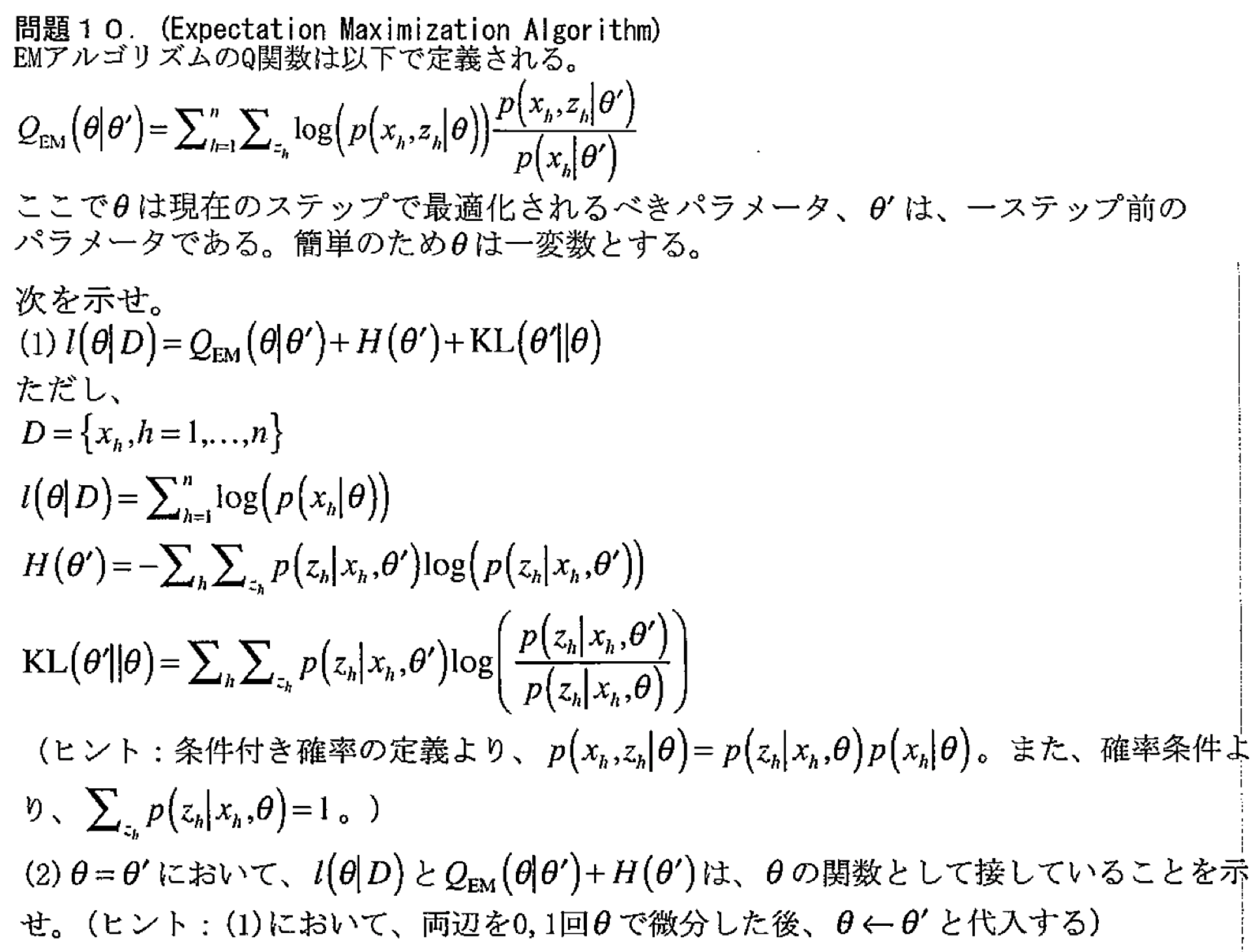

Solution

1

$$
\begin{aligned}
&\mathcal{Q}_{\text{EM}}\left(\theta|\theta^{\prime}\right) + H\left(\theta^{\prime}\right) + \mathrm{KL}\left(\theta^{\prime}\|\theta\right)\\
=&\sum_{h=1}^n\sum_{z_h}\log\left(p\left(x_h,z_h|\theta\right)\right)\frac{p\left(x_h,z_h|\theta^{\prime}\right)}{p\left(x_h|\theta^{\prime}\right)} - \sum_{h=1}^n\sum_{z_h}p\left(z_h|x_h,\theta^{\prime}\right)\log\left(p\left(z_h|x_h,\theta^{\prime}\right)\right)\\
&+ \sum_{h=1}^n\sum_{z_h}p\left(z_h|x_h,\theta^{\prime}\right)\log\left(\frac{p\left(z_h|x_h,\theta^{\prime}\right)}{p\left(z_h|x_h,\theta\right)}\right)\\
=&\sum_{h=1}^n\sum_{z_h}\log\left(p\left(x_h,z_h|\theta\right)\right)\frac{p\left(x_h,z_h|\theta^{\prime}\right)}{p\left(x_h|\theta^{\prime}\right)} - \sum_{h=1}^n\sum_{z_h}p\left(z_h|x_h,\theta^{\prime}\right)\log\left(p\left(z_h|x_h,\theta\right)\right)\quad\left(\text{the }\log p\left(z_h|x_h,\theta^{\prime}\right)\text{ terms cancel}\right)\\
=&\sum_{h=1}^n\sum_{z_h}\log\left(p\left(x_h,z_h|\theta\right)\right)p\left(z_h|x_h,\theta^{\prime}\right) - \sum_{h=1}^n\sum_{z_h}p\left(z_h|x_h,\theta^{\prime}\right)\log\left(p\left(z_h|x_h,\theta\right)\right)\quad\left(\because\text{conditional probability}\right)\\
=&\sum_{h=1}^n\sum_{z_h}p\left(z_h|x_h,\theta^{\prime}\right)\log\left(p\left(x_h|\theta\right)\right)\quad\left(\because\text{conditional probability}\right)\\
=&\sum_{h=1}^n\log\left(p\left(x_h|\theta\right)\right)\sum_{z_h}p\left(z_h|x_h,\theta^{\prime}\right)\\
=&\sum_{h=1}^n\log\left(p\left(x_h|\theta\right)\right)\quad\left(\because\ \sum_{z_h}p\left(z_h|x_h,\theta^{\prime}\right)=1\right)\\
=&l\left(\theta|D\right)
\end{aligned}
$$
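Because the identity above holds for any model with a discrete latent variable, it can be sanity-checked numerically. The sketch below assumes a hypothetical model that is not part of the problem: a two-component, unit-variance 1-D Gaussian mixture with \(\theta=(w,\mu_0,\mu_1)\) and \(p(z=1|\theta)=w\). The data, the parameter values, and the helper names (`log_joint`, `log_posterior`, `decomposition`) are illustrative assumptions only.

```python
import math


def log_joint(x, z, theta):
    """log p(x, z | theta) for a hypothetical mixture: p(z=1)=w, x|z=k ~ N(mu_k, 1)."""
    w, mu0, mu1 = theta
    prior, mu = (w, mu1) if z == 1 else (1.0 - w, mu0)
    return math.log(prior) - 0.5 * math.log(2.0 * math.pi) - 0.5 * (x - mu) ** 2


def log_marginal(x, theta):
    """log p(x | theta) = log sum_z p(x, z | theta), computed with log-sum-exp."""
    a = [log_joint(x, z, theta) for z in (0, 1)]
    m = max(a)
    return m + math.log(sum(math.exp(v - m) for v in a))


def log_posterior(x, z, theta):
    """log p(z | x, theta) = log p(x, z | theta) - log p(x | theta)."""
    return log_joint(x, z, theta) - log_marginal(x, theta)


def decomposition(data, theta, theta_p):
    """Return (Q_EM(theta|theta'), H(theta'), KL(theta'||theta)) summed over the data."""
    q = h = kl = 0.0
    for x in data:
        for z in (0, 1):
            lp_old = log_posterior(x, z, theta_p)
            r = math.exp(lp_old)  # p(z|x,theta') = p(x,z|theta') / p(x|theta')
            q += r * log_joint(x, z, theta)
            h -= r * lp_old
            kl += r * (lp_old - log_posterior(x, z, theta))
    return q, h, kl


data = [-1.2, 0.3, 0.8, 2.5, 3.1]  # made-up observations
theta = (0.4, -0.5, 2.0)           # arbitrary theta
theta_p = (0.6, 0.0, 1.5)          # arbitrary theta', different from theta

q, h, kl = decomposition(data, theta, theta_p)
loglik = sum(log_marginal(x, theta) for x in data)
print(q + h + kl, loglik)  # the two numbers agree up to rounding error
```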

2

$$
\begin{aligned}
\mathrm{KL}\left(\theta^{\prime}\|\theta\right)|_{\theta=\theta^{\prime}}
&= \sum_{h=1}^n\sum_{z_h}p\left(z_h|x_h,\theta^{\prime}\right)\log\left(\frac{p\left(z_h|x_h,\theta^{\prime}\right)}{p\left(z_h|x_h,\theta^{\prime}\right)}\right)\\
&= \sum_{h=1}^n\sum_{z_h}p\left(z_h|x_h,\theta^{\prime}\right)\cdot\log(1) = 0
\end{aligned}
$$

Combining this with part 1, at \(\theta=\theta^{\prime}\) we have \(l\left(\theta|D\right) = \mathcal{Q}_{\text{EM}}\left(\theta|\theta^{\prime}\right)+H\left(\theta^{\prime}\right)\).

Moreover,

$$
\begin{aligned}
\frac{\partial}{\partial\theta}l\left(\theta|D\right)|_{\theta=\theta^{\prime}}
&= \sum_{h=1}^n\frac{\partial}{\partial\theta}\left(\log\left(p\left(x_h|\theta\right)\right)\right)|_{\theta=\theta^{\prime}}\\
&=\sum_{h=1}^n\frac{1}{p\left(x_h|\theta^{\prime}\right)}\frac{\partial}{\partial\theta}\left(p\left(x_h|\theta\right)\right)|_{\theta=\theta^{\prime}}\\
\frac{\partial}{\partial\theta}\left(\mathcal{Q}_{\text{EM}}\left(\theta|\theta^{\prime}\right) + H\left(\theta^{\prime}\right)\right)|_{\theta=\theta^{\prime}}
&=\sum_{h=1}^n\sum_{z_h}\frac{\frac{\partial}{\partial\theta}\left(p\left(x_h,z_h|\theta\right)\right)}{p\left(x_h,z_h|\theta\right)}|_{\theta=\theta^{\prime}}\frac{p\left(x_h,z_h|\theta^{\prime}\right)}{p\left(x_h|\theta^{\prime}\right)}\quad\left(\because H\left(\theta^{\prime}\right)\text{ does not depend on }\theta\right)\\
&= \sum_{h=1}^n\frac{1}{p\left(x_h|\theta^{\prime}\right)}\sum_{z_h}\frac{\partial}{\partial\theta}\left(p\left(x_h,z_h|\theta\right)\right)|_{\theta=\theta^{\prime}}\\
&=\sum_{h=1}^n\frac{1}{p\left(x_h|\theta^{\prime}\right)}\frac{\partial}{\partial\theta}\left(p\left(x_h|\theta\right)\right)|_{\theta=\theta^{\prime}}\quad\left(\because\ \sum_{z_h}p\left(x_h,z_h|\theta\right)=p\left(x_h|\theta\right)\right)
\end{aligned}
$$

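Since the two right-hand sides coincide, the equality above also holds for the first derivatives with respect to \(\theta\) at \(\theta=\theta^{\prime}\).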

Therefore, the claim holds.
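The tangency at \(\theta=\theta^{\prime}\) (equal values and equal first derivatives) can be checked numerically as well. This is a sketch under assumptions: it uses a hypothetical two-component, unit-variance 1-D Gaussian mixture with \(\theta=(w,\mu_0,\mu_1)\), made-up data, and illustrative helper names (`q_plus_h`, `num_grad`). Both gradients are approximated with central finite differences using an assumed step `eps`, so they agree only up to discretization and rounding error.

```python
import math

# Hypothetical model: p(z=1|theta) = w, p(x|z=k, theta) = N(x; mu_k, 1), theta = (w, mu0, mu1).
DATA = [-1.2, 0.3, 0.8, 2.5, 3.1]  # made-up observations


def log_joint(x, z, theta):
    """log p(x, z | theta) for the hypothetical mixture."""
    w, mu0, mu1 = theta
    prior, mu = (w, mu1) if z == 1 else (1.0 - w, mu0)
    return math.log(prior) - 0.5 * math.log(2.0 * math.pi) - 0.5 * (x - mu) ** 2


def log_marginal(x, theta):
    """log p(x | theta), summing out z with log-sum-exp."""
    a = [log_joint(x, z, theta) for z in (0, 1)]
    m = max(a)
    return m + math.log(sum(math.exp(v - m) for v in a))


def loglik(theta):
    """l(theta | D) = sum_h log p(x_h | theta)."""
    return sum(log_marginal(x, theta) for x in DATA)


def q_plus_h(theta, theta_p):
    """Q_EM(theta | theta') + H(theta'); only the Q_EM part depends on theta."""
    total = 0.0
    for x in DATA:
        for z in (0, 1):
            lp = log_joint(x, z, theta_p) - log_marginal(x, theta_p)  # log p(z | x, theta')
            r = math.exp(lp)
            total += r * log_joint(x, z, theta) - r * lp
    return total


def num_grad(f, theta, eps=1e-6):
    """Central finite-difference gradient of f at theta."""
    grad = []
    for i in range(len(theta)):
        up, dn = list(theta), list(theta)
        up[i] += eps
        dn[i] -= eps
        grad.append((f(up) - f(dn)) / (2.0 * eps))
    return grad


theta_p = [0.6, 0.0, 1.5]  # arbitrary theta'
value_gap = loglik(theta_p) - q_plus_h(theta_p, theta_p)
grad_l = num_grad(loglik, theta_p)
grad_bound = num_grad(lambda t: q_plus_h(t, theta_p), theta_p)
print(value_gap)
print(grad_l)
print(grad_bound)
```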