import sys

sys.path.append("path/to/library/")

Part 1: STDP and its varities¶

STDP alters the weights of synapses depending on the relative spike timing between the postsynaptic neuron and the presynaptic neuron.

_STDP overview (from Sjöström J, Gerstner W (2010) Spike-timing dependent plasticity. Scholarpedia)_

- Within some time windows:

- if "PRE before POST", we get Long-Term Potentiation (LTP)

- if "POST before PRE", we get Long-Term Depression (LTD)

- The time windows for LTP and LTD are generally on the order of tens of milliseconds.

- The LTD window is generally longer than the LTP window.

Implementation¶

- To implement STDP in spiking neural network models, different STDP rules can be used.

- In general, different rules result in different distributions of synaptic weights over the course of learning.

- Examples of such weight distributions are shown below.

STDP rules (from Gilson M, Fukai T (2011) Stability versus Neuronal Specialization for STDP. PLoS ONE)

- Additive STDP tends to produce competition between synapses but needs to have its weights bound to ensure network stability (e.g. to avoid over-excitation)

- Multiplicative STDP tends to produce less competition between synapses but owing to its weight-dependent manipulation of synaptic weights does not require artificial bounds.

- Logorithmic STDP attempts to reconcile the two.

Additive STDP rule¶

In the following exercise, we will use the additive STDP rule, sometimes also called the exponential STDP rule:

$$ \Delta w_j= \begin{cases} a^+ \cdot e^{\big( \dfrac{t_j-t_i}{\tau^+} \big)} & \text{if}\ t_j \leq t_i & \text{(LTP)} \\ -a^- \cdot e^{\big( - \dfrac{t_j-t_i}{\tau^-} \big)} & \text{if}\ t_j > t_i & \text{(LTD)} \end{cases} $$| variable | Meaning |

|---|---|

| $a^+$ | learning rate for LTP |

| $a^-$ | learning rate for LTD |

| $t_j$ | spike time of the presynaptic neuron $j$ |

| $t_i$ | spike time of the postsynaptic neuron $i$ |

| $\tau^+$ | time constant for LTP (ms) |

| $\tau^-$ | time constant for LTD (ms) |

Using pre-defined paramaters which we will use in the second part of this tutorial, let's plot **"how the this STDP rule changes weights depending on the timing of presynaptic and postsynaptic spikes."**

import numpy as np

import matplotlib.pyplot as plt

from OIST.tutorial4 import AddSTDP

add_STDP_paramaters = {

'a_LTP' : 0.03125,

'a_LTD' : 0.85 * 0.03125,

'tau_LTP' : 16.8,

'tau_LTD' : 33.7

}

model = AddSTDP()

model.load_params(**add_STDP_paramaters)

time_differences = np.linspace(-125,125,1000)

model.simulate(time_differences)

Exercise 1

- What features do you notice in this STDP curve?

- Experiment with different values in

add_STDP_paramaters. How do each of the values change the shape of the STDP curve? - How, in a network of neurons, could this STDP rule lead to over-excitation or network instability?

Part 2: Unsupervised pattern-detection using STDP¶

In this part of the tutorial we will be replicating the results from [Masquelier et al. (2008) Spike Timing Dependent Plasticity Finds the Start of Repeating Patterns in Continuous Spike Trains. PLoS ONE](https://journals.plos.org/plosone/article?id=10.1371/journal.pone.0001377). (In fact, we already reproduced Figure 2 from their paper in the first part of this tutorial.)

- Masquelier et al. (2008) create a spiking neural network with

- 2,000 presynaptic neurons

- just one postsynpatic neuron.

- They implement additative STDP in this network, which allows the "postsynaptic neuron" to learn to detect the beginning of repeating patterns of spiking activity arriving from the 2,000 presynaptic neurons.

- Importantly, the presynaptic neurons also have periods of random, non-patterned activity and have additional noisy spiking activity at all times.

Let's begin by constructing the spike trains of the 2,000 presynaptic neurons.

We will run our simulations for 450s, so we will create 450s worth of spike trains for these neurons.

The first step is construct random activity with the following properties:

- Each presynpatic neuron will emit spikes independently

- generated by a Poisson process with a variable instantaneous firing rate $r$

- $r$ varies randomly beteen $0$ and $90$ Hz.

- The rate of change in $r$ is modified by $dr$

- $dr$ starts at $0$

- $dr$ is modified by $ds$, randomly picked from a uniform distribution over $[−360,+360]$ Hz/s.

- $dr$ is clipped to within $[-1800,1800]$ Hz/s

- we will also manually add some additional spikes to guarantee that "in every 50ms time bin, each neuron spikes at least once"

Make a training data¶

from OIST.tutorial4 import PoissonProcessGenerator, plotPatternRaster

poisson_params = {

"min_span": 50, # [ms]

"starting_Hz": 54, # [Hz]

"min_r": 0, # [Hz]

"max_r": 90, # [Hz]

"min_ds": -3.6e-1, # [Hz/ms]

"max_ds": 3.6e-1, # [Hz/ms]

"min_dr": -1.8, # [Hz/ms]

"max_dr": 1.8, # [Hz/ms]

"segment_length": 50, # [ms] Length of 1 Presynaptic spiking segment length.

}

seed = 0

num_presynaptic_neurons = 2000 # Number of the presynaptic neurons.

simulation_length = 1000 # [ms]

poisson_generator = PoissonProcessGenerator()

poisson_generator.load_params(**poisson_params)

spikes = poisson_generator.generate_spikes(simulation_length, num_presynaptic_neurons, seed=seed)

Now we will

- Divide the presynaptic spiking activity into segments of 50ms

- Select a random 50ms segment to be considered as the "pattern"

- Copy and paste this pattern across the raster plot in a certain proportion of segments.

- Add 10Hz Noises to all neurons at all times.

Divide and Select "Pattern"¶

poisson_generator.select_segment(seed=seed)

fig,ax = plt.subplots(figsize=(5,5))

ax = plotPatternRaster(spikes, ax=ax)

plt.tight_layout()

plt.show()

Copy and Paste this "Pattern"¶

proportion_of_neurons=0.5

frequency_of_patterns=0.25

pre_spikes_wrepeats, output_identities = poisson_generator.repeat_segment(proportion_of_neurons, frequency_of_patterns)

Add Poisson Noises¶

frequency = 10 # [Hz]

noise_added_spikes = poisson_generator.addPoisonNoise(frequency=frequency)

fig,ax = plt.subplots(figsize=(5,5))

ax = plotPatternRaster(noise_added_spikes, ax=ax)

plt.tight_layout()

plt.show()

Firing Rates¶

from OIST.tutorial4 import plotFiringRates

fig = plt.figure(figsize=(12,4))

fig = plotFiringRates(noise_added_spikes, fig=fig)

plt.show()

Check the Repeated Position¶

fig,ax = plt.subplots(figsize=(5,5))

ax = plotPatternRaster(noise_added_spikes, ax=ax)

ax = plotPatternRaster(output_identities, cmap="RdGy_r", ax=ax, alpha=0.7)

plt.tight_layout()

plt.show()

- With these paramaters, we can embed a repeating pattern within some pseduo-random, spontaneous activity.

- However, learning takes time! And we have only been simulating 1s of activity.

- In this task, the authors reported they needed 13.5s of activity (or 700 pattern presentations) for the neuron to show signs of learning to recognise the pattern.

Figure 4 from Masquelier et al. 2008: Overview of the 450 s simulation.

Here we plotted the membrane potential as a function of simulation time, at the beginning, middle, and end of the simulation. Grey rectangles indicate pattern presentations. (a) At the beginning of the simulation the neuron is non-selective because the synaptic weights are all equal. It thus fires periodically, both inside and outside the pattern. (b) At t≈13.5 s, after about 70 pattern presentations and 700 discharges, selectivity to the pattern is emerging: gradually the neuron almost stops discharging outside the pattern (no false alarms), while it does discharge most of the time the pattern is present (high hit rate), here even twice (c) End of the simulation. The system has converged (by saturation). Postsynaptic spike latency is about 4 ms. Hit rate is 99.1% with no false alarms (estimated on the last 150 s).

- It is therefore necessary to use some pre-computed spontaneous activity prepared earlier, found in the file

spont_100s_alt.npy. - It contains 100s of pseduo-random, spontaneous activity for us to use in our simulations.

- Warning, it is a large file at ~1.6GB!

$ wget --content-disposition https://www.dropbox.com/sh/p9ml6l402znbzmy/AAA2LP7W1bcvwSgYnmx9ahyga?dl=0&preview=spont_100s_alt.npy

# Pattern1. Download pre-computed files.

pre_spikes_wrepeats_100s = np.load("path/to/spont_100s_alt.npy")

poisson_generator = PoissonProcessGenerator()

poisson_generator.load_params(**poisson_params)

poisson_generator.memorize(pre_spikes_wrepeats_100s, repeated=False)

# Pattern2. Make by myself.

simulation_length_100s = 100_000

num_presynaptic_neurons = 2_000

poisson_generator = PoissonProcessGenerator()

poisson_generator.load_params(**poisson_params)

pre_spikes_wrepeats_100s = poisson_generator.generate_spikes(simulation_length_100s, num_presynaptic_neurons, seed=seed)

# Pattern.1

pre_spikes_wrepeats_100s = np.load("path/to/spont_100s_alt.npy")

poisson_generator = PoissonProcessGenerator()

poisson_generator.load_params(**poisson_params)

poisson_generator.memorize(pre_spikes_wrepeats_100s, repeated=False)

proportion_of_neurons = 0.5

frequency_of_patterns = 0.25

# Repeat & Paste.

poisson_generator.select_segment(seed=seed)

pre_spikes_wrepeats, output_identities = poisson_generator.repeat_segment(proportion_of_neurons, frequency_of_patterns)

noise_added_spikes = poisson_generator.addPoisonNoise(frequency=frequency)

repeated_idxes = np.any(poisson_generator.repeated_position, axis=1)

print(f"Pattern shape = {poisson_generator.pattern_spikes.shape}")

print(f"Poisson Process shape = {noise_added_spikes.shape}")

Now we are ready to use these spike trains to train a postsynaptic neuron! ... or are we?

- Creating a model conceptually or mathematically is just the first step.

- Next we have to figure out how to simulate it computationally.

NEST simulation¶

import nest

NEST contains many STDP synapse rules. Which one should we use?

- We said earlier that we will use an additive/exponential STDP rule, however that is not the only parameter to consider.

- Another consideration is "how to pair two continuous spikes trains" such that we can apply STDP appropriately to all presynaptic spikes $j$ coming to the postsynaptic neuron $i$.

- A list of STDP models implemented in NEST can be found here: https://nest-simulator.readthedocs.io/en/latest/models/stdp.html

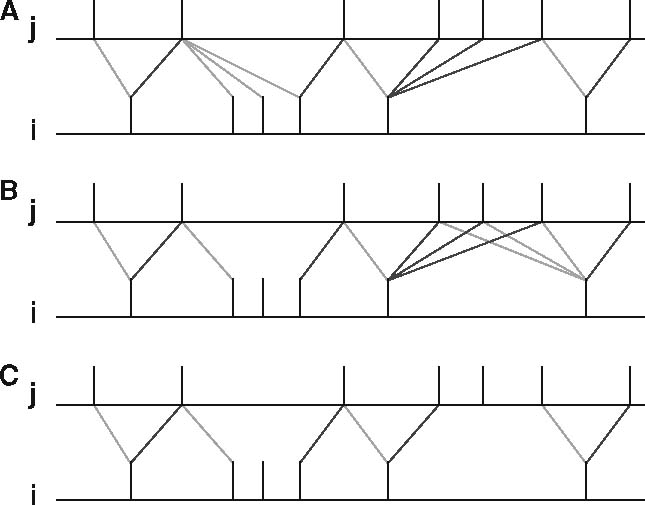

- The following models correspond to the below figure for pairing of spikes for STDP:

stdp_nn_symm_synapse(panel A)stdp_nn_pre-centered_synapse(panel B)stdp_nn_restr_synapse(panel C)

Figure 7 from Morrison et al. 2008.

Examples of nearest neighbor spike pairing schemes for a presynaptic neuron j and a postsynaptic neuron i. In each case, the dark gray indicate which pairings contribute toward depression of a synapse, and light gray indicate which pairings contribute toward potentiation.

Figure 7 from Morrison et al. 2008.

Examples of nearest neighbor spike pairing schemes for a presynaptic neuron j and a postsynaptic neuron i. In each case, the dark gray indicate which pairings contribute toward depression of a synapse, and light gray indicate which pairings contribute toward potentiation.

- [Masquelier et al. (2008)](https://journals.plos.org/plosone/article?id=10.1371/journal.pone.0001377) uses something akin to panel C

- In the (unreleased) NEST 3.0 there is a

stdp_nn_restr_synapsemodel which is quite similar. (However, we are using NEST 2.X. now.) - After going through the available STDP models, none appeared to be capable of replicating the paper due to this paper requiring a particular spike-pairing method which doesn't seem to be represented in any of the currently-implemented STDP models in NEST.

- Therefore, we need to make some changes to our experiment!

- But before we do that, let's simulate the current model using some different synapses which are available to us.

- Start with the static synapse and then experiment with some STDP synapse models to see what goes wrong and "how different paramaters affect the results."

# Check what model we can use.

nest.Models('synapses')

from OIST.tutorial4 import NeuronalNetwork

simulation_times = 1000

spike_indicies = [np.where(is_spike)[0] for is_spike in noise_added_spikes==1]

STDP_paramaters = {

'a_LTP' : 0.03125,

'a_LTD' : 0.85 * 0.03125,

'tau_LTP' : 16.8,

'tau_LTD' : 33.7,

}

model = NeuronalNetwork(**STDP_paramaters)

model.build(spike_indicies)

model.compile(synapse_model="static", ex=1)

ax = model.simulate(T=simulation_times, color="black")

ax = model.mask_pattern(ax, output_identities, simulation_times)

plt.show()

ax = model.plotWeights(repeated_idxes)

plt.show()

model.compile(synapse_model="standard", ex=1)

ax = model.simulate(T=simulation_times, color="black")

ax = model.mask_pattern(ax, output_identities, simulation_times)

plt.show()

ax = model.plotWeights(repeated_idxes)

plt.show()

model.compile(synapse_model="triplet", ex=1)

ax = model.simulate(T=simulation_times, color="black")

ax = model.mask_pattern(ax, output_identities, simulation_times)

plt.show()

ax = model.plotWeights(repeated_idxes)

plt.show()

model.compile(synapse_model="FACETS", ex=1)

ax = model.simulate(T=simulation_times, color="black")

ax = model.mask_pattern(ax, output_identities, simulation_times)

plt.show()

ax = model.plotWeights(repeated_idxes)

plt.show()

Couldn't recognize the patterns.

Exercise 2

- What are some general problems with the STDP synapses?

- How do these synapse models cause failure of our model to replicate the original paper?

- What can we do to the task or the model which might help?

model = NeuronalNetwork(**STDP_paramaters)

model.build(spike_indicies)

gaussian_params = {

"simulation_length_pulses": 10000.0,

"proportion_of_neurons_pulsing": 0.5,

"number_of_pulses": 50,

"spikes_per_pulse": 5,

"std_spikes_per_pulse": 5.0

}

# Overwrite the spike generator.

noise_rate = 35.0 # firing rate of Poisson neurons (Hz)

model.spike_generator = nest.Create("poisson_generator", model.num_presynaptic_neurons, params={'rate': noise_rate})

# Add the gaussian Packets as an additional input.

model.addGaussianPackets(**gaussian_params)

model.compile(synapse_model="standard", ex=2)

ax = model.simulate(T=simulation_times, color="black")

ax = model.mask_pattern(ax, output_identities, simulation_times)

plt.show()

ax = model.plotWeights(repeated_idxes)

plt.show()

Difficult to find a best parameter :(

→ How about usingBayesian optimization ??

【Challenge Exercise】

Consider whether and how your network could learn multiple patterns, e.g. would you need to add more post-synaptic neurons to the network? Hint: see [Masquelier et al. (2009) Competitive STDP-Based Spike Pattern Learning. Neural Computation](https://www.ncbi.nlm.nih.gov/pubmed/19718815).